The documentation you are viewing is for Dapr v1.13, which is an older version of Dapr. For up-to-date documentation, see the latest version.

Quickstart: Service-to-service resiliency

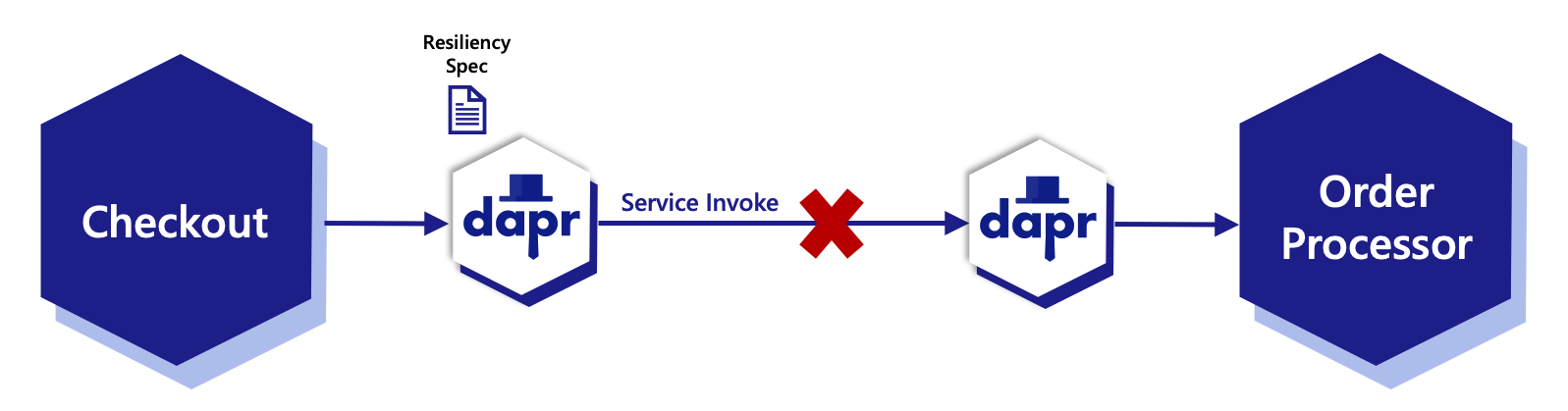

Observe Dapr resiliency capabilities by simulating a system failure. In this Quickstart, you will:

- Run two microservice applications: checkout and order-processor. checkout will continuously make Dapr service invocation requests to order-processor.
- Trigger the resiliency spec by simulating a system failure.
- Remove the failure to allow the microservice application to recover.

The steps below are repeated for each language-specific Dapr SDK (Python, JavaScript, .NET, Java, and Go). Follow the section for your preferred language.

Pre-requisites (Python)

For this example, you will need:

- Dapr CLI and initialized environment.
- Python 3 with pip3.

Step 1: Set up the environment

Clone the sample provided in the Quickstarts repo.

git clone https://github.com/dapr/quickstarts.git

Step 2: Run the order-processor service

In a terminal window, from the root of the Quickstart directory, navigate to the order-processor directory.

cd service_invocation/python/http/order-processor

Install dependencies:

pip3 install -r requirements.txt

Run the order-processor service alongside a Dapr sidecar.

dapr run --app-port 8001 --app-id order-processor --resources-path ../../../resources/ --app-protocol http --dapr-http-port 3501 -- python3 app.py
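The order-processor app listens on its app port (8001 above) and logs each order it receives. The sketch below shows a minimal stand-in for it, written with only Python's standard library. It is hypothetical: the Quickstart's actual app.py may use a web framework and differ in detail. The /orders route is inferred from the order-processor:orders endpoint that appears in the logs later in this guide.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer

APP_PORT = 8001  # must match --app-port in the dapr run command


def describe_order(raw_body: bytes) -> str:
    """Build the log line for one received order (hypothetical format)."""
    order = json.loads(raw_body)
    return f"Order received: {json.dumps(order)}"


class OrderHandler(BaseHTTPRequestHandler):
    """Handles POST /orders forwarded by the order-processor sidecar."""

    def do_POST(self):
        if self.path != "/orders":
            self.send_response(404)
            self.end_headers()
            return
        length = int(self.headers.get("Content-Length", 0))
        print(describe_order(self.rfile.read(length)), flush=True)
        self.send_response(200)
        self.end_headers()


def serve(port: int = APP_PORT) -> None:
    """Block and serve requests; the sidecar forwards invocations here."""
    HTTPServer(("127.0.0.1", port), OrderHandler).serve_forever()
```

Calling serve() blocks and handles requests until interrupted. The printed line matches the "Order received" output shown in Step 4.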

Step 3: Run the checkout service application

In a new terminal window, from the root of the Quickstart directory, navigate to the checkout directory.

cd service_invocation/python/http/checkout

Install dependencies:

pip3 install -r requirements.txt

Run the checkout service alongside a Dapr sidecar.

dapr run --app-id checkout --resources-path ../../../resources/ --app-protocol http --dapr-http-port 3500 -- python3 app.py

The Dapr sidecar then loads the resiliency spec located in the resources directory:

apiVersion: dapr.io/v1alpha1
kind: Resiliency
metadata:
  name: myresiliency
scopes:
  - checkout
spec:
  policies:
    retries:
      retryForever:
        policy: constant
        maxInterval: 5s
        maxRetries: -1
    circuitBreakers:
      simpleCB:
        maxRequests: 1
        timeout: 5s
        trip: consecutiveFailures >= 5
  targets:
    apps:
      order-processor:
        retry: retryForever
        circuitBreaker: simpleCB
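The spec combines two kinds of scoping. scopes limits which sidecars load it, and targets limits which callees get the named policies. The sketch below transcribes the spec into Python data and resolves which policies govern a given call. It is a simplified model for illustration, not Dapr's actual resolution logic, which covers more cases. The function name and the "payments" app id used in the check are hypothetical.

```python
from typing import Optional

# The resiliency spec above, transcribed into Python data (metadata omitted).
RESILIENCY = {
    "scopes": ["checkout"],
    "spec": {
        "policies": {
            "retries": {
                "retryForever": {"policy": "constant", "maxInterval": "5s", "maxRetries": -1},
            },
            "circuitBreakers": {
                "simpleCB": {"maxRequests": 1, "timeout": "5s",
                             "trip": "consecutiveFailures >= 5"},
            },
        },
        "targets": {
            "apps": {
                "order-processor": {"retry": "retryForever", "circuitBreaker": "simpleCB"},
            },
        },
    },
}


def policies_for_call(resiliency: dict, caller: str, target_app: str) -> Optional[dict]:
    """Return the policies that apply when `caller` invokes `target_app`, or None.

    Simplified model: the spec is only loaded by sidecars named in `scopes`,
    and only apps listed under `targets.apps` get policies.
    """
    if caller not in resiliency["scopes"]:
        return None
    spec = resiliency["spec"]
    target = spec["targets"]["apps"].get(target_app)
    if target is None:
        return None
    return {
        "retry": spec["policies"]["retries"][target["retry"]],
        "circuitBreaker": spec["policies"]["circuitBreakers"][target["circuitBreaker"]],
    }


# checkout -> order-processor is covered; the reverse direction is not.
print(policies_for_call(RESILIENCY, "checkout", "order-processor") is not None)  # True
print(policies_for_call(RESILIENCY, "order-processor", "checkout"))  # None
```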

Step 4: View the Service Invocation outputs

When both services and sidecars are running, notice how orders pass from the checkout service to the order-processor service through Dapr service invocation.

checkout service output:

== APP == Order passed: {"orderId": 1}

== APP == Order passed: {"orderId": 2}

== APP == Order passed: {"orderId": 3}

== APP == Order passed: {"orderId": 4}

order-processor service output:

== APP == Order received: {"orderId": 1}

== APP == Order received: {"orderId": 2}

== APP == Order received: {"orderId": 3}

== APP == Order received: {"orderId": 4}
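Each "Order passed" line corresponds to an HTTP call that checkout makes to its own sidecar (port 3500), which forwards the call to order-processor. The sketch below shows that pattern using Dapr's HTTP service invocation API (/v1.0/invoke/<app-id>/method/<method>). It is a hypothetical stand-in for the Quickstart's checkout app, which may call the sidecar differently, for example through an SDK. The method name "orders" and the one-second delay between orders are assumptions.

```python
import json
import time
import urllib.request

DAPR_HTTP_PORT = 3500  # matches --dapr-http-port for the checkout sidecar


def invoke_url(app_id: str, method: str, port: int = DAPR_HTTP_PORT) -> str:
    """URL of Dapr's HTTP service invocation API on the local sidecar."""
    return f"http://localhost:{port}/v1.0/invoke/{app_id}/method/{method}"


def pass_order(order_id: int) -> None:
    """POST one order to order-processor through the checkout sidecar."""
    body = json.dumps({"orderId": order_id}).encode()
    req = urllib.request.Request(
        invoke_url("order-processor", "orders"),
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    # The checkout sidecar applies the resiliency policies to this call, so
    # while order-processor is stopped the sidecar retries it instead of
    # failing immediately.
    with urllib.request.urlopen(req) as resp:
        resp.read()
    print(f"Order passed: {body.decode()}", flush=True)


def run(count: int = 10, delay_s: float = 1.0) -> None:
    """Send orders 1..count, pausing between them."""
    for order_id in range(1, count + 1):
        pass_order(order_id)
        time.sleep(delay_s)
```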

Step 5: Introduce a fault

Simulate a fault by stopping the order-processor service. Once the instance is stopped, service invoke operations from the checkout service begin to fail.

Because the resiliency.yaml spec defines the order-processor service as a resiliency target, the retry and circuit breaker policies are applied to all failed requests to it:

targets:
  apps:
    order-processor:
      retry: retryForever
      circuitBreaker: simpleCB

In the order-processor window, stop the service:

CTRL + C

Once the first request fails, the retry policy titled retryForever is applied:

INFO[0005] Error processing operation endpoint[order-processor, order-processor:orders]. Retrying...

Retries continue indefinitely for each failed request (maxRetries: -1), at 5-second intervals.

retryForever:
  policy: constant
  maxInterval: 5s
  maxRetries: -1
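The retry behavior can be sketched as a loop that waits a fixed interval between attempts and treats maxRetries: -1 as "no limit". This is a simplified illustration, not Dapr's implementation. The sleep function is injected as an assumption so that the loop can be exercised without real waiting.

In Dapr's resiliency spec, the fixed interval of a constant policy is set by duration, which defaults to 5s. maxInterval caps exponential back-off. The 5-second spacing seen here therefore comes from that default.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def retry_constant(
    operation: Callable[[], T],
    interval_s: float = 5.0,
    max_retries: int = -1,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call `operation` until it succeeds, waiting `interval_s` between attempts.

    max_retries = -1 retries forever, mirroring `maxRetries: -1`;
    otherwise the last error is re-raised after `max_retries` retries.
    """
    retries = 0
    while True:
        try:
            return operation()
        except Exception:
            if max_retries != -1 and retries >= max_retries:
                raise
            retries += 1
            sleep(interval_s)
```

With max_retries=-1, the loop only returns once the operation succeeds. This mirrors how requests to a stopped order-processor keep being retried until the service comes back.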

Once 5 consecutive retries have failed, the circuit breaker policy, simpleCB, is tripped and the breaker opens, halting all requests:

INFO[0025] Circuit breaker "order-processor:orders" changed state from closed to open

circuitBreakers:
  simpleCB:
    maxRequests: 1
    timeout: 5s
    trip: consecutiveFailures >= 5
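Here is a rough consistency check on the log timestamps. Suppose the first failure lands at about 5 seconds and each retry follows 5 seconds later. Then the fifth consecutive failure lands at about 25 seconds, which lines up with the closed-to-open transition at INFO[0025]. Adding the breaker's 5s timeout puts the first half-open transition at about 30 seconds, matching INFO[0030] in the logs. The arithmetic below assumes a fixed 5-second interval and ignores request latency.

```python
FIRST_FAILURE_S = 5    # timestamp of the first "Retrying..." log line
RETRY_INTERVAL_S = 5   # constant retry interval
TRIP_THRESHOLD = 5     # trip: consecutiveFailures >= 5
BREAKER_TIMEOUT_S = 5  # timeout: 5s before the breaker goes half-open


def failure_times(n: int) -> list[int]:
    """Timestamps (in seconds) of the first n consecutive failed attempts."""
    return [FIRST_FAILURE_S + i * RETRY_INTERVAL_S for i in range(n)]


trip_at = failure_times(TRIP_THRESHOLD)[-1]
half_open_at = trip_at + BREAKER_TIMEOUT_S
print(failure_times(TRIP_THRESHOLD), trip_at, half_open_at)  # [5, 10, 15, 20, 25] 25 30
```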

After 5 seconds have elapsed (the timeout value), the circuit breaker switches to a half-open state and lets one request through (maxRequests: 1) to check whether the fault has been resolved. If that request also fails, the circuit trips back to the open state.

INFO[0030] Circuit breaker "order-processor:orders" changed state from open to half-open

INFO[0030] Circuit breaker "order-processor:orders" changed state from half-open to open

INFO[0030] Circuit breaker "order-processor:orders" changed state from open to half-open

INFO[0030] Circuit breaker "order-processor:orders" changed state from half-open to open

This half-open/open behavior will continue for as long as the order-processor service is stopped.
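The closed, open, and half-open cycle described above can be modeled as a small state machine. This is a toy sketch for illustration, not Dapr's implementation. In particular, it uses a fixed failure threshold instead of evaluating a trip expression. The injectable clock parameter is an assumption that makes the timing testable.

```python
import time
from typing import Callable, Optional


class CircuitBreaker:
    """Toy breaker mirroring simpleCB: trip after `threshold` consecutive
    failures, stay open for `timeout_s`, then let `max_requests` probe
    requests through in the half-open state."""

    def __init__(self, threshold: int = 5, timeout_s: float = 5.0,
                 max_requests: int = 1,
                 clock: Callable[[], float] = time.monotonic):
        self.threshold = threshold
        self.timeout_s = timeout_s
        self.max_requests = max_requests
        self.clock = clock
        self.state = "closed"
        self.failures = 0
        self.opened_at: Optional[float] = None
        self.probes = 0

    def allow(self) -> bool:
        """Return True if a request may be sent now."""
        if self.state == "open" and self.clock() - self.opened_at >= self.timeout_s:
            self.state, self.probes = "half-open", 0
        if self.state == "closed":
            return True
        if self.state == "half-open" and self.probes < self.max_requests:
            self.probes += 1
            return True
        return False

    def record(self, success: bool) -> None:
        """Report the outcome of a request that allow() let through."""
        if success:
            self.state, self.failures = "closed", 0
            return
        if self.state == "half-open":
            self._open()  # a failed probe re-opens the breaker
            return
        self.failures += 1
        if self.failures >= self.threshold:
            self._open()

    def _open(self) -> None:
        self.state, self.opened_at = "open", self.clock()
```

While order-processor stays down, every probe fails and the breaker alternates between half-open and open, as in the logs. The first successful probe after a restart closes it again.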

Step 6: Remove the fault

Once you restart the order-processor service, the application recovers seamlessly and picks up where it left off, accepting order requests again.

In the order-processor service terminal, restart the application:

dapr run --app-port 8001 --app-id order-processor --resources-path ../../../resources/ --app-protocol http --dapr-http-port 3501 -- python3 app.py
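Optionally, you can confirm that the restarted order-processor sidecar is up before watching the outputs. Dapr's health endpoint, /v1.0/healthz, responds with HTTP 204 when the sidecar is healthy, so you can poll it on port 3501. The sketch below uses only the standard library. The helper names, attempt count, and delay are arbitrary choices, not part of the Quickstart.

```python
import time
import urllib.error
import urllib.request


def healthz_url(dapr_http_port: int) -> str:
    """Dapr sidecar health endpoint on the given HTTP port."""
    return f"http://localhost:{dapr_http_port}/v1.0/healthz"


def wait_for_sidecar(dapr_http_port: int = 3501, attempts: int = 30,
                     delay_s: float = 1.0) -> bool:
    """Poll the sidecar until it reports healthy (HTTP 204) or give up."""
    for _ in range(attempts):
        try:
            with urllib.request.urlopen(healthz_url(dapr_http_port), timeout=2) as resp:
                if resp.status == 204:
                    return True
        except (urllib.error.URLError, OSError):
            pass  # sidecar not listening yet, or not healthy
        time.sleep(delay_s)
    return False
```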

checkout service output:

== APP == Order passed: {"orderId": 5}

== APP == Order passed: {"orderId": 6}

== APP == Order passed: {"orderId": 7}

== APP == Order passed: {"orderId": 8}

== APP == Order passed: {"orderId": 9}

== APP == Order passed: {"orderId": 10}

order-processor service output:

== APP == Order received: {"orderId": 5}

== APP == Order received: {"orderId": 6}

== APP == Order received: {"orderId": 7}

== APP == Order received: {"orderId": 8}

== APP == Order received: {"orderId": 9}

== APP == Order received: {"orderId": 10}
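To check that recovery really was seamless, you can compare the two outputs. Every order that checkout reports as passed should also appear as received. The sketch below assumes you have copied each terminal's output into a string. The helper names are hypothetical.

```python
import json
import re

ORDER_LINE = re.compile(r"Order (passed|received): (\{.*\})")


def order_ids(log_text: str, kind: str) -> list[int]:
    """Extract orderId values from 'Order passed' or 'Order received' lines."""
    ids = []
    for match in ORDER_LINE.finditer(log_text):
        if match.group(1) == kind:
            ids.append(json.loads(match.group(2))["orderId"])
    return ids


def missing_orders(checkout_log: str, processor_log: str) -> set[int]:
    """Orders checkout reported as passed that order-processor never logged."""
    passed = set(order_ids(checkout_log, "passed"))
    received = set(order_ids(processor_log, "received"))
    return passed - received
```

An empty result means no order was lost while order-processor was stopped.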

Pre-requisites (JavaScript)

For this example, you will need:

- Dapr CLI and initialized environment.
- Node.js with npm.

Step 1: Set up the environment

Clone the sample provided in the Quickstarts repo.

git clone https://github.com/dapr/quickstarts.git

Step 2: Run the order-processor service

In a terminal window, from the root of the Quickstart directory, navigate to the order-processor directory.

cd service_invocation/javascript/http/order-processor

Install dependencies:

npm install

Run the order-processor service alongside a Dapr sidecar.

dapr run --app-port 5001 --app-id order-processor --resources-path ../../../resources/ --app-protocol http --dapr-http-port 3501 -- npm start

Step 3: Run the checkout service application

In a new terminal window, from the root of the Quickstart directory, navigate to the checkout directory.

cd service_invocation/javascript/http/checkout

Install dependencies:

npm install

Run the checkout service alongside a Dapr sidecar.

dapr run --app-id checkout --resources-path ../../../resources/ --app-protocol http --dapr-http-port 3500 -- npm start

The Dapr sidecar then loads the resiliency spec located in the resources directory:

apiVersion: dapr.io/v1alpha1
kind: Resiliency
metadata:
  name: myresiliency
scopes:
  - checkout
spec:
  policies:
    retries:
      retryForever:
        policy: constant
        maxInterval: 5s
        maxRetries: -1
    circuitBreakers:
      simpleCB:
        maxRequests: 1
        timeout: 5s
        trip: consecutiveFailures >= 5
  targets:
    apps:
      order-processor:
        retry: retryForever
        circuitBreaker: simpleCB

Step 4: View the Service Invocation outputs

When both services and sidecars are running, notice how orders pass from the checkout service to the order-processor service through Dapr service invocation.

checkout service output:

== APP == Order passed: {"orderId": 1}

== APP == Order passed: {"orderId": 2}

== APP == Order passed: {"orderId": 3}

== APP == Order passed: {"orderId": 4}

order-processor service output:

== APP == Order received: {"orderId": 1}

== APP == Order received: {"orderId": 2}

== APP == Order received: {"orderId": 3}

== APP == Order received: {"orderId": 4}

Step 5: Introduce a fault

Simulate a fault by stopping the order-processor service. Once the instance is stopped, service invoke operations from the checkout service begin to fail.

Because the resiliency.yaml spec defines the order-processor service as a resiliency target, the retry and circuit breaker policies are applied to all failed requests to it:

targets:
  apps:
    order-processor:
      retry: retryForever
      circuitBreaker: simpleCB

In the order-processor window, stop the service:

CTRL + C

Once the first request fails, the retry policy titled retryForever is applied:

INFO[0005] Error processing operation endpoint[order-processor, order-processor:orders]. Retrying...

Retries continue indefinitely for each failed request (maxRetries: -1), at 5-second intervals.

retryForever:
  policy: constant
  maxInterval: 5s
  maxRetries: -1

Once 5 consecutive retries have failed, the circuit breaker policy, simpleCB, is tripped and the breaker opens, halting all requests:

INFO[0025] Circuit breaker "order-processor:orders" changed state from closed to open

circuitBreakers:
  simpleCB:
    maxRequests: 1
    timeout: 5s
    trip: consecutiveFailures >= 5

After 5 seconds have elapsed (the timeout value), the circuit breaker switches to a half-open state and lets one request through (maxRequests: 1) to check whether the fault has been resolved. If that request also fails, the circuit trips back to the open state.

INFO[0030] Circuit breaker "order-processor:orders" changed state from open to half-open

INFO[0030] Circuit breaker "order-processor:orders" changed state from half-open to open

INFO[0030] Circuit breaker "order-processor:orders" changed state from open to half-open

INFO[0030] Circuit breaker "order-processor:orders" changed state from half-open to open

This half-open/open behavior will continue for as long as the order-processor service is stopped.

Step 6: Remove the fault

Once you restart the order-processor service, the application will recover seamlessly, picking up where it left off.

In the order-processor service terminal, restart the application:

dapr run --app-port 5001 --app-id order-processor --resources-path ../../../resources/ --app-protocol http --dapr-http-port 3501 -- npm start

checkout service output:

== APP == Order passed: {"orderId": 5}

== APP == Order passed: {"orderId": 6}

== APP == Order passed: {"orderId": 7}

== APP == Order passed: {"orderId": 8}

== APP == Order passed: {"orderId": 9}

== APP == Order passed: {"orderId": 10}

order-processor service output:

== APP == Order received: {"orderId": 5}

== APP == Order received: {"orderId": 6}

== APP == Order received: {"orderId": 7}

== APP == Order received: {"orderId": 8}

== APP == Order received: {"orderId": 9}

== APP == Order received: {"orderId": 10}

Pre-requisites (.NET)

For this example, you will need:

- Dapr CLI and initialized environment.
- .NET SDK (provides the dotnet CLI).

Step 1: Set up the environment

Clone the sample provided in the Quickstarts repo.

git clone https://github.com/dapr/quickstarts.git

Step 2: Run the order-processor service

In a terminal window, from the root of the Quickstart directory, navigate to the order-processor directory.

cd service_invocation/csharp/http/order-processor

Install dependencies:

dotnet restore

dotnet build

Run the order-processor service alongside a Dapr sidecar.

dapr run --app-port 7001 --app-id order-processor --resources-path ../../../resources/ --app-protocol http --dapr-http-port 3501 -- dotnet run

Step 3: Run the checkout service application

In a new terminal window, from the root of the Quickstart directory, navigate to the checkout directory.

cd service_invocation/csharp/http/checkout

Install dependencies:

dotnet restore

dotnet build

Run the checkout service alongside a Dapr sidecar.

dapr run --app-id checkout --resources-path ../../../resources/ --app-protocol http --dapr-http-port 3500 -- dotnet run

The Dapr sidecar then loads the resiliency spec located in the resources directory:

apiVersion: dapr.io/v1alpha1
kind: Resiliency
metadata:
  name: myresiliency
scopes:
  - checkout
spec:
  policies:
    retries:
      retryForever:
        policy: constant
        maxInterval: 5s
        maxRetries: -1
    circuitBreakers:
      simpleCB:
        maxRequests: 1
        timeout: 5s
        trip: consecutiveFailures >= 5
  targets:
    apps:
      order-processor:
        retry: retryForever
        circuitBreaker: simpleCB

Step 4: View the Service Invocation outputs

When both services and sidecars are running, notice how orders pass from the checkout service to the order-processor service through Dapr service invocation.

checkout service output:

== APP == Order passed: {"orderId": 1}

== APP == Order passed: {"orderId": 2}

== APP == Order passed: {"orderId": 3}

== APP == Order passed: {"orderId": 4}

order-processor service output:

== APP == Order received: {"orderId": 1}

== APP == Order received: {"orderId": 2}

== APP == Order received: {"orderId": 3}

== APP == Order received: {"orderId": 4}

Step 5: Introduce a fault

Simulate a fault by stopping the order-processor service. Once the instance is stopped, service invoke operations from the checkout service begin to fail.

Because the resiliency.yaml spec defines the order-processor service as a resiliency target, the retry and circuit breaker policies are applied to all failed requests to it:

targets:
  apps:
    order-processor:
      retry: retryForever
      circuitBreaker: simpleCB

In the order-processor window, stop the service:

CTRL + C

Once the first request fails, the retry policy titled retryForever is applied:

INFO[0005] Error processing operation endpoint[order-processor, order-processor:orders]. Retrying...

Retries continue indefinitely for each failed request (maxRetries: -1), at 5-second intervals.

retryForever:
  policy: constant
  maxInterval: 5s
  maxRetries: -1

Once 5 consecutive retries have failed, the circuit breaker policy, simpleCB, is tripped and the breaker opens, halting all requests:

INFO[0025] Circuit breaker "order-processor:orders" changed state from closed to open

circuitBreakers:
  simpleCB:
    maxRequests: 1
    timeout: 5s
    trip: consecutiveFailures >= 5

After 5 seconds have elapsed (the timeout value), the circuit breaker switches to a half-open state and lets one request through (maxRequests: 1) to check whether the fault has been resolved. If that request also fails, the circuit trips back to the open state.

INFO[0030] Circuit breaker "order-processor:orders" changed state from open to half-open

INFO[0030] Circuit breaker "order-processor:orders" changed state from half-open to open

INFO[0030] Circuit breaker "order-processor:orders" changed state from open to half-open

INFO[0030] Circuit breaker "order-processor:orders" changed state from half-open to open

This half-open/open behavior will continue for as long as the order-processor service is stopped.

Step 6: Remove the fault

Once you restart the order-processor service, the application will recover seamlessly, picking up where it left off.

In the order-processor service terminal, restart the application:

dapr run --app-port 7001 --app-id order-processor --resources-path ../../../resources/ --app-protocol http --dapr-http-port 3501 -- dotnet run

checkout service output:

== APP == Order passed: {"orderId": 5}

== APP == Order passed: {"orderId": 6}

== APP == Order passed: {"orderId": 7}

== APP == Order passed: {"orderId": 8}

== APP == Order passed: {"orderId": 9}

== APP == Order passed: {"orderId": 10}

order-processor service output:

== APP == Order received: {"orderId": 5}

== APP == Order received: {"orderId": 6}

== APP == Order received: {"orderId": 7}

== APP == Order received: {"orderId": 8}

== APP == Order received: {"orderId": 9}

== APP == Order received: {"orderId": 10}

Pre-requisites (Java)

For this example, you will need:

- Dapr CLI and initialized environment.
- Java JDK 17 (or greater):
  - Oracle JDK, or
  - OpenJDK
- Apache Maven, version 3.x.

Step 1: Set up the environment

Clone the sample provided in the Quickstarts repo.

git clone https://github.com/dapr/quickstarts.git

Step 2: Run the order-processor service

In a terminal window, from the root of the Quickstart directory, navigate to the order-processor directory.

cd service_invocation/java/http/order-processor

Install dependencies:

mvn clean install

Run the order-processor service alongside a Dapr sidecar.

dapr run --app-id order-processor --resources-path ../../../resources/ --app-port 9001 --app-protocol http --dapr-http-port 3501 -- java -jar target/OrderProcessingService-0.0.1-SNAPSHOT.jar

Step 3: Run the checkout service application

In a new terminal window, from the root of the Quickstart directory, navigate to the checkout directory.

cd service_invocation/java/http/checkout

Install dependencies:

mvn clean install

Run the checkout service alongside a Dapr sidecar.

dapr run --app-id checkout --resources-path ../../../resources/ --app-protocol http --dapr-http-port 3500 -- java -jar target/CheckoutService-0.0.1-SNAPSHOT.jar

The Dapr sidecar then loads the resiliency spec located in the resources directory:

apiVersion: dapr.io/v1alpha1
kind: Resiliency
metadata:
  name: myresiliency
scopes:
  - checkout
spec:
  policies:
    retries:
      retryForever:
        policy: constant
        maxInterval: 5s
        maxRetries: -1
    circuitBreakers:
      simpleCB:
        maxRequests: 1
        timeout: 5s
        trip: consecutiveFailures >= 5
  targets:
    apps:
      order-processor:
        retry: retryForever
        circuitBreaker: simpleCB

Step 4: View the Service Invocation outputs

When both services and sidecars are running, notice how orders pass from the checkout service to the order-processor service through Dapr service invocation.

checkout service output:

== APP == Order passed: {"orderId": 1}

== APP == Order passed: {"orderId": 2}

== APP == Order passed: {"orderId": 3}

== APP == Order passed: {"orderId": 4}

order-processor service output:

== APP == Order received: {"orderId": 1}

== APP == Order received: {"orderId": 2}

== APP == Order received: {"orderId": 3}

== APP == Order received: {"orderId": 4}

Step 5: Introduce a fault

Simulate a fault by stopping the order-processor service. Once the instance is stopped, service invoke operations from the checkout service begin to fail.

Because the resiliency.yaml spec defines the order-processor service as a resiliency target, the retry and circuit breaker policies are applied to all failed requests to it:

targets:
  apps:
    order-processor:
      retry: retryForever
      circuitBreaker: simpleCB

In the order-processor window, stop the service:

CTRL + C

Once the first request fails, the retry policy titled retryForever is applied:

INFO[0005] Error processing operation endpoint[order-processor, order-processor:orders]. Retrying...

Retries continue indefinitely for each failed request (maxRetries: -1), at 5-second intervals.

retryForever:
  policy: constant
  maxInterval: 5s
  maxRetries: -1

Once 5 consecutive retries have failed, the circuit breaker policy, simpleCB, is tripped and the breaker opens, halting all requests:

INFO[0025] Circuit breaker "order-processor:orders" changed state from closed to open

circuitBreakers:
  simpleCB:
    maxRequests: 1
    timeout: 5s
    trip: consecutiveFailures >= 5

After 5 seconds have elapsed (the timeout value), the circuit breaker switches to a half-open state and lets one request through (maxRequests: 1) to check whether the fault has been resolved. If that request also fails, the circuit trips back to the open state.

INFO[0030] Circuit breaker "order-processor:orders" changed state from open to half-open

INFO[0030] Circuit breaker "order-processor:orders" changed state from half-open to open

INFO[0030] Circuit breaker "order-processor:orders" changed state from open to half-open

INFO[0030] Circuit breaker "order-processor:orders" changed state from half-open to open

This half-open/open behavior will continue for as long as the order-processor service is stopped.

Step 6: Remove the fault

Once you restart the order-processor service, the application will recover seamlessly, picking up where it left off.

In the order-processor service terminal, restart the application:

dapr run --app-id order-processor --resources-path ../../../resources/ --app-port 9001 --app-protocol http --dapr-http-port 3501 -- java -jar target/OrderProcessingService-0.0.1-SNAPSHOT.jar

checkout service output:

== APP == Order passed: {"orderId": 5}

== APP == Order passed: {"orderId": 6}

== APP == Order passed: {"orderId": 7}

== APP == Order passed: {"orderId": 8}

== APP == Order passed: {"orderId": 9}

== APP == Order passed: {"orderId": 10}

order-processor service output:

== APP == Order received: {"orderId": 5}

== APP == Order received: {"orderId": 6}

== APP == Order received: {"orderId": 7}

== APP == Order received: {"orderId": 8}

== APP == Order received: {"orderId": 9}

== APP == Order received: {"orderId": 10}

Pre-requisites (Go)

For this example, you will need:

- Dapr CLI and initialized environment.
- Go toolchain (provides the go command).

Step 1: Set up the environment

Clone the sample provided in the Quickstarts repo.

git clone https://github.com/dapr/quickstarts.git

Step 2: Run the order-processor service

In a terminal window, from the root of the Quickstart directory, navigate to the order-processor directory.

cd service_invocation/go/http/order-processor

Install dependencies:

go build .

Run the order-processor service alongside a Dapr sidecar.

dapr run --app-port 6001 --app-id order-processor --resources-path ../../../resources/ --app-protocol http --dapr-http-port 3501 -- go run .

Step 3: Run the checkout service application

In a new terminal window, from the root of the Quickstart directory, navigate to the checkout directory.

cd service_invocation/go/http/checkout

Install dependencies:

go build .

Run the checkout service alongside a Dapr sidecar.

dapr run --app-id checkout --resources-path ../../../resources/ --app-protocol http --dapr-http-port 3500 -- go run .

The Dapr sidecar then loads the resiliency spec located in the resources directory:

apiVersion: dapr.io/v1alpha1
kind: Resiliency
metadata:
  name: myresiliency
scopes:
  - checkout
spec:
  policies:
    retries:
      retryForever:
        policy: constant
        maxInterval: 5s
        maxRetries: -1
    circuitBreakers:
      simpleCB:
        maxRequests: 1
        timeout: 5s
        trip: consecutiveFailures >= 5
  targets:
    apps:
      order-processor:
        retry: retryForever
        circuitBreaker: simpleCB

Step 4: View the Service Invocation outputs

When both services and sidecars are running, notice how orders pass from the checkout service to the order-processor service through Dapr service invocation.

checkout service output:

== APP == Order passed: {"orderId": 1}

== APP == Order passed: {"orderId": 2}

== APP == Order passed: {"orderId": 3}

== APP == Order passed: {"orderId": 4}

order-processor service output:

== APP == Order received: {"orderId": 1}

== APP == Order received: {"orderId": 2}

== APP == Order received: {"orderId": 3}

== APP == Order received: {"orderId": 4}

Step 5: Introduce a fault

Simulate a fault by stopping the order-processor service. Once the instance is stopped, service invoke operations from the checkout service begin to fail.

Because the resiliency.yaml spec defines the order-processor service as a resiliency target, the retry and circuit breaker policies are applied to all failed requests to it:

targets:
  apps:
    order-processor:
      retry: retryForever
      circuitBreaker: simpleCB

In the order-processor window, stop the service:

CTRL + C

Once the first request fails, the retry policy titled retryForever is applied:

INFO[0005] Error processing operation endpoint[order-processor, order-processor:orders]. Retrying...

Retries continue indefinitely for each failed request (maxRetries: -1), at 5-second intervals.

retryForever:
  policy: constant
  maxInterval: 5s
  maxRetries: -1

Once 5 consecutive retries have failed, the circuit breaker policy, simpleCB, is tripped and the breaker opens, halting all requests:

INFO[0025] Circuit breaker "order-processor:orders" changed state from closed to open

circuitBreakers:
  simpleCB:
    maxRequests: 1
    timeout: 5s
    trip: consecutiveFailures >= 5

After 5 seconds have elapsed (the timeout value), the circuit breaker switches to a half-open state and lets one request through (maxRequests: 1) to check whether the fault has been resolved. If that request also fails, the circuit trips back to the open state.

INFO[0030] Circuit breaker "order-processor:orders" changed state from open to half-open

INFO[0030] Circuit breaker "order-processor:orders" changed state from half-open to open

INFO[0030] Circuit breaker "order-processor:orders" changed state from open to half-open

INFO[0030] Circuit breaker "order-processor:orders" changed state from half-open to open

This half-open/open behavior will continue for as long as the order-processor service is stopped.

Step 6: Remove the fault

Once you restart the order-processor service, the application will recover seamlessly, picking up where it left off.

In the order-processor service terminal, restart the application:

dapr run --app-port 6001 --app-id order-processor --resources-path ../../../resources/ --app-protocol http --dapr-http-port 3501 -- go run .

checkout service output:

== APP == Order passed: {"orderId": 5}

== APP == Order passed: {"orderId": 6}

== APP == Order passed: {"orderId": 7}

== APP == Order passed: {"orderId": 8}

== APP == Order passed: {"orderId": 9}

== APP == Order passed: {"orderId": 10}

order-processor service output:

== APP == Order received: {"orderId": 5}

== APP == Order received: {"orderId": 6}

== APP == Order received: {"orderId": 7}

== APP == Order received: {"orderId": 8}

== APP == Order received: {"orderId": 9}

== APP == Order received: {"orderId": 10}

Tell us what you think!

We’re continuously working to improve our Quickstart examples and value your feedback. Did you find this quickstart helpful? Do you have suggestions for improvement?

Join the discussion in our Discord channel.

Next steps

See the Dapr resiliency documentation for more information about Dapr resiliency.

Explore Dapr tutorials >>